
Tools Documentation

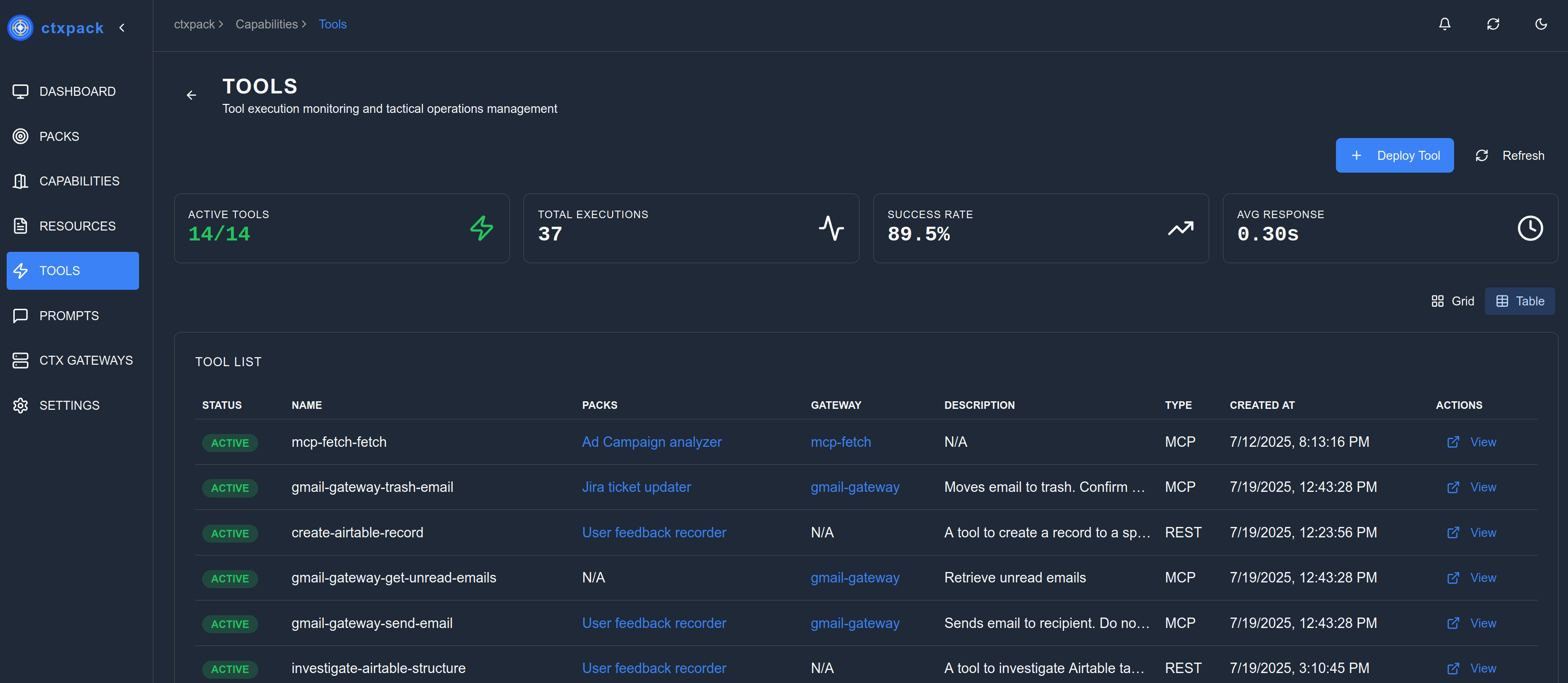

Overview

The Tools section of the application lets you deploy new operational tools to the network. You can configure any reachable API endpoint to return the data you need. These tools can then be added to individual packs, which let you use them within your AI Agents or in manual LLM conversation workflows.

To learn more about what tools are and how best to reason about them, see the "What are tools" section.

Tools in Ctxpack

You can introduce tools into the Ctxpack platform in two different ways:

- Registering an existing MCP server as a Ctx Gateway

All tools exposed by a registered Ctx Gateway (a remote MCP server) are automatically registered as tools in the Ctxpack application. This lets you pick and choose individual tools from broad, bloated MCP servers to build targeted, lean context packs.

- Deploying a Ctxpack tool by connecting to an existing API endpoint

You can register any reachable API as a tool in Ctxpack. These can be standard REST APIs producing JSON responses or APIs returning more complex XML or HTML responses. Creating a tool in Ctxpack involves several layers of configuration that ensure it works reliably within AI workflows. The basic information layer defines how the tool presents itself to AI systems. Give your tools descriptive names and descriptions, since LLM clients and AI Agents rely on them to decide when and how to use each tool.
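As an illustration of the first option, the sketch below shows what picking individual tools from an MCP server can look like at the protocol level. It builds the JSON-RPC 2.0 `tools/list` request defined by the Model Context Protocol and filters a result down to the tools you want in a pack. The sample tool list and the `pick_tools` helper are hypothetical, and this is not a description of how Ctxpack implements Ctx Gateways internally.

```python
import json
from typing import Any, Dict, List, Set

# Hypothetical result of an MCP `tools/list` call; tool names and schemas are made up.
SAMPLE_TOOLS_LIST_RESULT: Dict[str, Any] = {
    "tools": [
        {"name": "list_bases", "description": "Lists all bases.",
         "inputSchema": {"type": "object", "properties": {}}},
        {"name": "list_records", "description": "Lists records in a table.",
         "inputSchema": {"type": "object",
                         "properties": {"table": {"type": "string"}},
                         "required": ["table"]}},
        {"name": "delete_base", "description": "Deletes a base.",
         "inputSchema": {"type": "object",
                         "properties": {"base_id": {"type": "string"}},
                         "required": ["base_id"]}},
    ]
}


def tools_list_request(request_id: int) -> Dict[str, Any]:
    """JSON-RPC 2.0 request an MCP client sends to enumerate a server's tools."""
    return {"jsonrpc": "2.0", "id": request_id, "method": "tools/list"}


def pick_tools(result: Dict[str, Any], wanted: Set[str]) -> List[Dict[str, Any]]:
    """Keep only the tools named in `wanted`, in the order the server listed them."""
    return [tool for tool in result["tools"] if tool["name"] in wanted]


print(json.dumps(tools_list_request(1)))
pack = pick_tools(SAMPLE_TOOLS_LIST_RESULT, {"list_records"})
print([tool["name"] for tool in pack])  # ['list_records']
```

Leaving out a destructive tool such as the hypothetical `delete_base` is one practical reason to build a narrower pack from a larger server.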

Getting Started

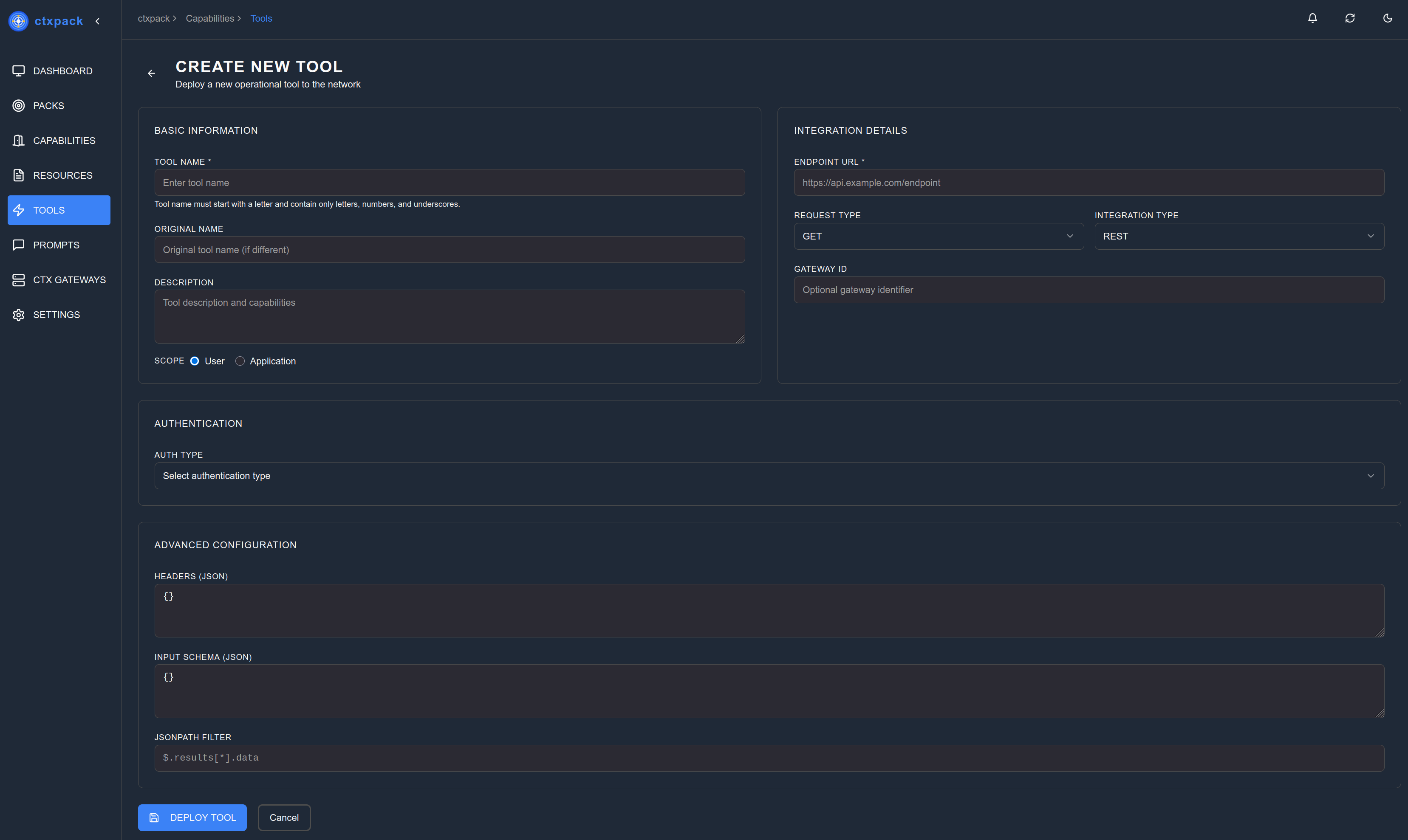

Creating your first tool

The "Tools/create" section lets you register (or "deploy") a new tool in the application. Here you give the tool a name, a description, and its integration details: the endpoint URL, the request type (GET, POST, etc.), and the integration protocol. This is where you connect the tool to the actual service it will interact with.
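The fields on the "Tools/create" page can be thought of as one record. The sketch below models them as a Python dataclass with a few sanity checks. The field names, the default `protocol` value, and the allowed method list are assumptions made for illustration; they are not a documented Ctxpack API or export format.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical set of request types this sketch accepts.
ALLOWED_METHODS = {"GET", "POST", "PUT", "PATCH", "DELETE"}


@dataclass
class ToolDefinition:
    # Hypothetical field names mirroring the options on the "Tools/create" page.
    name: str
    description: str
    endpoint_url: str
    method: str = "GET"
    protocol: str = "REST"  # assumed value for the integration protocol

    def validate(self) -> List[str]:
        """Return a list of problems; an empty list means the definition looks usable."""
        problems = []
        if not self.name.strip():
            problems.append("name is empty")
        if not self.description.strip():
            problems.append("description is empty")
        if not self.endpoint_url.startswith(("http://", "https://")):
            problems.append("endpoint_url must be an http(s) URL")
        if self.method.upper() not in ALLOWED_METHODS:
            problems.append(f"unsupported request type: {self.method}")
        return problems


tool = ToolDefinition(
    name="retriever-content-calendar-entries",
    description="Retrieves marketing content calendar entries.",
    endpoint_url="https://api.example.com/content-calendar",  # placeholder URL
)
print(tool.validate())  # []
```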

Authentication configuration ensures secure access to protected resources. Ctxpack supports various authentication types, allowing tools to work with systems that require API keys or other credential mechanisms.

The advanced configuration options provide fine-grained control over how the tool operates. Custom headers can be added for specific API requirements, input schemas define what parameters the tool expects, and JSONPath filters can extract specific data from responses. These features allow you to tailor each tool's behavior to match both the target API's requirements and your AI workflows' needs.
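To make authentication and custom headers concrete, this sketch assembles (but does not send) the HTTP request a tool might make, using only Python's standard library. The `Bearer` scheme, the `X-Api-Version` header, and the `EXAMPLE_API_KEY` environment variable are hypothetical; the API you integrate with decides which credential mechanism and header names it expects.

```python
import os
import urllib.request
from typing import Dict, Optional


def build_request(url: str, method: str, api_key: Optional[str] = None,
                  custom_headers: Optional[Dict[str, str]] = None) -> urllib.request.Request:
    """Assemble a request with default, custom and auth headers; nothing is sent."""
    headers = {"Accept": "application/json"}
    # Custom headers cover API-specific requirements and may override the defaults.
    if custom_headers:
        headers.update(custom_headers)
    # Hypothetical auth scheme: many APIs accept an API key as a Bearer token.
    if api_key:
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(url, headers=headers, method=method)


req = build_request(
    "https://api.example.com/content-calendar",  # placeholder URL
    "GET",
    api_key=os.environ.get("EXAMPLE_API_KEY"),  # hypothetical env var; None if unset
    custom_headers={"X-Api-Version": "2"},  # hypothetical API-specific header
)
print(req.get_method(), req.full_url)
```

Note that `urllib.request.Request` normalizes header names with `str.capitalize()`, so `X-Api-Version` is stored as `X-api-version`. Passing the request to `urllib.request.urlopen` would actually send it.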

Best practices

Describe your tools properly

Give your tools descriptive names. These names should usually match the action the end user or AI Agent wants performed when they ask their LLM Client to do some work. For example:

- Use case: The user wants to get marketing content calendar entries from Airtable.
- Tool name: retriever-content-calendar-entries

It is also extremely useful to write a clear description of what the tool does and how to use it. This works well as a structured set of instructions: a structure similar to the one below usually helps the Agent or LLM understand when to use the tool.

<usecase>

Explains WHAT the tool does and WHEN to use it

</usecase>

<instructions>

Covers HOW to use it correctly, with specifics about parameters and expected inputs

</instructions>
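If you write many tools, a small helper can keep descriptions consistent with the structure above. The sketch below fills the `<usecase>` and `<instructions>` tags for the example tool. The `start_date`, `end_date` and `channel` parameters are hypothetical, and the helper is only a convenience, not something Ctxpack requires.

```python
def build_description(usecase: str, instructions: str) -> str:
    """Wrap the WHAT/WHEN and HOW parts in <usecase> and <instructions> tags."""
    return (
        f"<usecase>\n{usecase.strip()}\n</usecase>\n\n"
        f"<instructions>\n{instructions.strip()}\n</instructions>"
    )


description = build_description(
    usecase=(
        "Retrieves marketing content calendar entries from Airtable. "
        "Use it when the user asks what content is planned for a date range."
    ),
    instructions=(
        # Hypothetical parameters, matching the example input schema idea.
        "Pass start_date and end_date as YYYY-MM-DD strings (both required). "
        "Optionally pass channel to limit results to one channel."
    ),
)
print(description)
```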

Define the input schemas

Adding input schemas to your tools is optional, but it helps the LLM Client or AI Agent immensely when it tries to use the tool. The easiest way to describe the schema is the JSON Schema format, which most REST API specification tools expose natively. If you are struggling to find good documentation for your endpoint, you can ask an LLM to generate a generic JSON Schema from a sample payload you give it; LLMs are generally good at this.
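As an example, a JSON Schema for the content calendar tool might look like the dictionary below. The parameters are hypothetical. The `missing_required` helper is a deliberately minimal check that only looks at the `required` list; full validation would use a JSON Schema library such as the third-party `jsonschema` package.

```python
import json
from typing import Any, Dict, List

# Hypothetical input schema for the retriever-content-calendar-entries example.
CALENDAR_INPUT_SCHEMA: Dict[str, Any] = {
    "type": "object",
    "properties": {
        "start_date": {"type": "string", "format": "date",
                       "description": "First day to include, as YYYY-MM-DD."},
        "end_date": {"type": "string", "format": "date",
                     "description": "Last day to include, as YYYY-MM-DD."},
        "channel": {"type": "string", "enum": ["blog", "newsletter", "social"],
                    "description": "Optional channel filter."},
    },
    "required": ["start_date", "end_date"],
    "additionalProperties": False,
}


def missing_required(args: Dict[str, Any], schema: Dict[str, Any]) -> List[str]:
    """List required properties absent from `args` (not full JSON Schema validation)."""
    return [key for key in schema.get("required", []) if key not in args]


print(json.dumps(CALENDAR_INPUT_SCHEMA, indent=2))
print(missing_required({"start_date": "2024-05-01"}, CALENDAR_INPUT_SCHEMA))  # ['end_date']
```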

Return only the necessary information

If the endpoint you are integrating with returns a vast amount of data, the AI Agent or LLM can get confused when using your tool or, worse, run out of tokens when using smaller models. You can define a JSONPath filter to retrieve only the relevant data from the response. The smaller and more targeted the response, the better the LLM or AI Agent understands it.
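In Ctxpack you only enter the filter expression. To show what an expression like `$.records[*].fields.Title` keeps and what it drops, the sketch below is a hypothetical, standard-library-only evaluator for a tiny JSONPath subset (`$`, `.key`, `[index]` and `[*]` on arrays). The sample response is made up. Real JSONPath, as standardized in RFC 9535, supports much more, such as filter expressions and recursive descent.

```python
import re
from typing import Any, List

# Tiny JSONPath subset: ".key" (identifier-like keys) and "[index]" / "[*]" on arrays.
_TOKEN = re.compile(r"\.([A-Za-z_][A-Za-z0-9_]*)|\[(\d+|\*)\]")


def jsonpath_subset(data: Any, path: str) -> List[Any]:
    """Return every value matched by `path`; unmatched steps simply yield nothing."""
    if not path.startswith("$"):
        raise ValueError("path must start with '$'")
    matches = [data]
    pos = 1
    while pos < len(path):
        m = _TOKEN.match(path, pos)
        if m is None:
            raise ValueError(f"unsupported syntax at position {pos}: {path[pos:]!r}")
        key, index = m.group(1), m.group(2)
        next_matches = []
        for node in matches:
            if key is not None and isinstance(node, dict) and key in node:
                next_matches.append(node[key])
            elif index == "*" and isinstance(node, list):
                next_matches.extend(node)
            elif index is not None and index != "*" and isinstance(node, list):
                i = int(index)
                if i < len(node):
                    next_matches.append(node[i])
        matches = next_matches
        pos = m.end()
    return matches


# Hypothetical, verbose API response; only the titles are useful to the LLM.
response = {
    "records": [
        {"id": "rec1", "fields": {"Title": "Spring launch post", "Date": "2024-05-02"}},
        {"id": "rec2", "fields": {"Title": "May newsletter", "Date": "2024-05-15"}},
    ],
    "offset": "page-2-token",
}
print(jsonpath_subset(response, "$.records[*].fields.Title"))
# ['Spring launch post', 'May newsletter']
```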